May 2018

The Sum of Its Parts

By Sandra Nunn, MA, RHIA, CHP

For The Record

Vol. 30 No. 5 P. 16

Data aggregation forms the backbone of successful analytics efforts.

AHIMA's House of Delegates recommends that state associations align themselves with other information management professional organizations. One of the most valuable of these is HIMSS, which, like AHIMA, offers a variety of webinars to support member learning. A recent HIMSS webinar focused on data aggregation and, coincidentally, touched on virtually every topic the Journal of AHIMA identified as most significant for 2018. These topics include the following:

• data analytics;

• informatics;

• information governance;

• education and workforce;

• clinical documentation improvement;

• inpatient and outpatient coding;

• privacy and security; and

• rules and regulations.

A review of the synopses of these topics and AHIMA's reasoning for their importance makes it apparent that data aggregation underpins success on every key HIM issue expected to draw attention in 2018.

Joseph Nichols, MD, principal of Health Data Consulting and host of the webinar "Data Aggregation: The Forgotten Key to Analytics," understands the infrastructure required to achieve HIM goals, all of which rely on high-quality information.

Nichols says there's been a "change in health care focus, switching from the volume of services to the value created for the patients." Rather than looking at what is adequate reimbursement for health care, "We need to know what adds value," he says, noting that quality data are key to determining costs per patient and the amount of money being wasted.

Nichols, who has experience with HIT from the provider, payer, government, and vendor sides, worked as a subcontractor to the Centers for Medicare & Medicaid Services (CMS) on rules and policy development. "All policy should come from good data," he says.

Health Data Consulting, which focuses on clinical documentation improvement, is usually sought out by clients who are vaguely aware that they have a problem that may be "getting our docs to document better" or "getting better results from queries submitted to data warehouses," Nichols says.

In the webinar, Nichols stressed that data aggregation is at "the heart of policies, rules, edits, and analytics." Once systems can guarantee "accurate, complete, and consistent documentation" and coders incorporate "well-defined standards, sound implementations, and robust concept support," data aggregation can occur in multiple systems based on "clear definitions, normalization, and accurate inclusions and exclusions."

Nichols says health care organizations must be clear about how they plan to use data aggregation in order to select the most appropriate project team members. Typically, however, projects include team members from the following domains:

• clinical;

• financial;

• coding;

• data;

• technical; and

• compliance.

In his book The Fifth Discipline: The Art & Practice of the Learning Organization, Peter Senge, PhD, stresses the importance of organizations coming to a shared vision. "A vision is truly shared when you and I have a similar picture and are committed to one another having it, not just each of us, individually, having it," he wrote.

Senge contends that "shared visions derive their power from a common caring." In health care, "common caring" means that team members strive to generate the best possible data for the best possible patient care and are willing to agree on mutually identified and defined concepts. In data aggregation, health care team members work with the consulting group to agree on the definition of each concept.

In a cogent example, Nichols discussed the concept of "burns," one of 150 injury-type categories. The health care team can be taught to determine what will be "in" a category (the types of data that will be pulled from the designated systems) and what will be "out," ie, the data from those systems that will be excluded from the returned results.

For burns, the team decided to include burns resulting from fire and toxic materials but to exclude items such as sunburn and rug burns. As a result, when a staff member searches for burns, only the codes and information pertinent to traumatic injuries are returned.
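The in/out decision for burns can be pictured as a concept definition carrying explicit inclusion and exclusion rules. The sketch below is a minimal illustration only, assuming a simple keyword match against record descriptions; the `Concept` class, its rule terms, and the sample records are hypothetical and are not Health Data Consulting's actual tooling.

```python
from dataclasses import dataclass, field


@dataclass
class Concept:
    """Hypothetical aggregation concept with explicit inclusions and exclusions."""
    name: str
    include_terms: set[str] = field(default_factory=set)
    exclude_terms: set[str] = field(default_factory=set)

    def matches(self, description: str) -> bool:
        text = description.lower()
        # A record is "in" only if it hits at least one inclusion term...
        if not any(term in text for term in self.include_terms):
            return False
        # ...and it is "out" again if any exclusion term also appears.
        return not any(term in text for term in self.exclude_terms)


# Illustrative rules mirroring the team's decision: fire and toxic-material
# burns are in; sunburn and rug burns are out.
burns = Concept(
    name="burns",
    include_terms={"burn", "corrosion"},
    exclude_terms={"sunburn", "rug burn"},
)

records = [
    "Burn of second degree of forearm from house fire",
    "Corrosion of hand from toxic chemical",
    "Sunburn of face",
    "Rug burn of knee",
]

selected = [r for r in records if burns.matches(r)]
print(selected)
```

Keyword matching is only a stand-in here; a production system would more likely apply the same in/out rules to mapped codes rather than to free text.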

Part of the process is a review of the "false positives" and "false negatives" returned by test searches, which must be weeded out to ensure that future search results are clean and reliable. When the team agrees on a concept, the decision generates a policy that is documented and tagged. All queries used for testing are retained.
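One way to picture the test-search review is to compare what a query returned against records the team has already judged by hand. In this sketch the record IDs and the reviewed set are hypothetical: false positives are returned records the team considers "out," and false negatives are "in" records the query missed.

```python
def review_test_search(returned: set[str], reviewed_in: set[str]) -> dict[str, set[str]]:
    """Compare a test query's results with a hand-reviewed reference set.

    returned:    record IDs the query actually returned
    reviewed_in: record IDs the team agreed belong to the concept
    """
    return {
        "true_positives": returned & reviewed_in,
        "false_positives": returned - reviewed_in,  # returned, but should be "out"
        "false_negatives": reviewed_in - returned,  # should be "in", but was missed
    }


# Hypothetical record IDs from one test search for "burns".
returned = {"r1", "r2", "r3"}
reviewed_in = {"r1", "r2", "r4"}

result = review_test_search(returned, reviewed_in)
print(sorted(result["false_positives"]), sorted(result["false_negatives"]))
# prints ['r3'] ['r4']
```

Each false positive points to an inclusion rule that is too broad, and each false negative to one that is too narrow; retaining the test queries lets the team rerun this comparison after every rule change.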

This ever-evolving process generates a database with a series of files to which items are mapped. For example, once a concept is created and defined, staff can map the appropriate codes from ICD-10, SNOMED CT, and other terminologies to that concept.
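A concept-to-code map of this kind can be sketched as a nested dictionary keyed by concept, then by terminology. The code values below are placeholders, not real ICD-10 or SNOMED CT codes, and the function names are hypothetical. The reverse lookup reflects the point that a single code may represent more than one concept.

```python
from collections import defaultdict

# Hypothetical concept map: concept ID -> terminology -> set of codes.
# Code values are placeholders, not real ICD-10 or SNOMED CT codes.
concept_map: dict[str, dict[str, set[str]]] = defaultdict(lambda: defaultdict(set))


def map_code(concept_id: str, terminology: str, code: str) -> None:
    """Record that `code` from `terminology` expresses the concept."""
    concept_map[concept_id][terminology].add(code)


def codes_for(concept_id: str) -> set[tuple[str, str]]:
    """All (terminology, code) pairs a query for this concept should pull."""
    return {
        (terminology, code)
        for terminology, codes in concept_map.get(concept_id, {}).items()
        for code in codes
    }


def concepts_for(terminology: str, code: str) -> set[str]:
    """Reverse lookup: one code may represent more than one concept."""
    return {
        concept_id
        for concept_id, by_terminology in concept_map.items()
        if code in by_terminology.get(terminology, set())
    }


map_code("burns", "ICD-10", "ICD10-PLACEHOLDER-1")
map_code("burns", "SNOMED CT", "SNOMED-PLACEHOLDER-1")
map_code("traumatic injury", "ICD-10", "ICD10-PLACEHOLDER-1")

print(sorted(codes_for("burns")))
print(sorted(concepts_for("ICD-10", "ICD10-PLACEHOLDER-1")))
```

Because queries go through the concept rather than any one code set, adding a new terminology only means adding mappings; the query itself does not change.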

"Concepts are the basis of all aggregation," Nichols says, offering the following key definitions:

• Concept: a thought that we have in our brain;

• Term: a word or expression (one of many) that we might use to communicate concepts to others; and

• Code: a shorthand set of characters that may represent one or more concepts.

Take the concept of "cow," for example. Nichols says this idea can be expressed in multiple terms, among them bovine, vaca, beef, steer, and doggie.

When health care professionals need to aggregate data organized in hierarchical systems such as taxonomies, they must be able to map among various databases to gain a complete picture of what they are seeking. An ontology, which Nichols defines as "the conceptualization of concepts," is a "data structure that defines unique concepts and maps those concepts to a variety of expressions (terms), including different code standards."
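Read as a data structure, Nichols' definition suggests one entry per unique concept, with many terms and codes pointing back to it. The sketch below uses the "cow" example from above; the class names and the code value are hypothetical, and a real ontology would hold far richer metadata.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class OntologyEntry:
    """A unique concept plus the many expressions (terms and codes) mapped to it."""
    concept_id: str
    terms: set[str] = field(default_factory=set)
    codes: dict[str, str] = field(default_factory=dict)  # code standard -> code


class Ontology:
    def __init__(self) -> None:
        self._entries: dict[str, OntologyEntry] = {}
        self._by_term: dict[str, str] = {}  # normalized term -> concept ID

    def add(self, entry: OntologyEntry) -> None:
        self._entries[entry.concept_id] = entry
        for term in entry.terms:
            self._by_term[term.lower()] = entry.concept_id

    def resolve(self, term: str) -> Optional[OntologyEntry]:
        """Map whatever term someone used back to the underlying concept."""
        concept_id = self._by_term.get(term.strip().lower())
        return self._entries.get(concept_id) if concept_id else None


ont = Ontology()
ont.add(OntologyEntry(
    "cow",
    {"cow", "bovine", "vaca", "beef", "steer", "doggie"},
    {"EXAMPLE-STANDARD": "HYPOTHETICAL-001"},  # placeholder code
))

entry = ont.resolve("Vaca")
print(entry.concept_id if entry else None)  # prints cow
```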

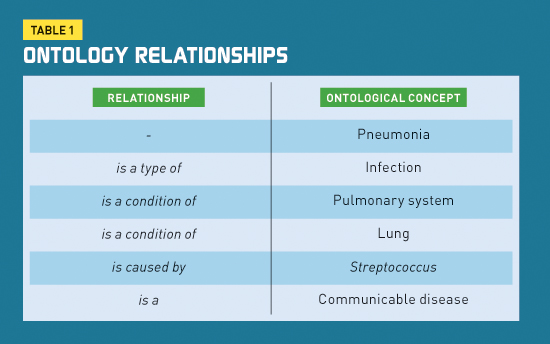

An ontology offers the "ability to categorize based on a limitless number of concept relationships as expressed in metadata tags," Nichols says. Take streptococcal pneumonia, for example. Data seekers can gain information from several relationships (see Table 1).
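Categorizing through metadata tags can be sketched as concepts that carry relationship-to-value tags, so any relationship becomes a way to group them. The tags below are illustrative and hypothetical; they are not a reproduction of Table 1.

```python
# Hypothetical ontology fragment: each concept carries metadata tags that
# express its relationships. Illustrative only, not the contents of Table 1.
ONTOLOGY: dict[str, dict[str, set[str]]] = {
    "streptococcal pneumonia": {
        "is_a": {"pneumonia", "bacterial infection"},
        "organism": {"streptococcus"},
        "body_site": {"lung"},
    },
    "streptococcal pharyngitis": {
        "is_a": {"pharyngitis", "bacterial infection"},
        "organism": {"streptococcus"},
        "body_site": {"pharynx"},
    },
    "viral pneumonia": {
        "is_a": {"pneumonia", "viral infection"},
        "body_site": {"lung"},
    },
}


def categorize(relationship: str, value: str) -> list[str]:
    """Return every concept tagged with the given relationship value."""
    return sorted(
        concept
        for concept, tags in ONTOLOGY.items()
        if value in tags.get(relationship, set())
    )


print(categorize("body_site", "lung"))
print(categorize("organism", "streptococcus"))
```

The same concept, streptococcal pneumonia, lands in a lung grouping, a streptococcus grouping, and a bacterial-infection grouping without being copied; adding a new tag adds a new way to categorize.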

To institutionalize careful concept definition, an organization needs a credible community that shares a vision of high-integrity, fully defined concepts and is willing to sustain the effort over time to realize the value of sound information. "High-quality, reliable data can change your business," Nichols notes.

According to GeekInterview.com, "Statistics have shown that 90% of all business reports contain aggregate information, making it essential to have proactive implementation of data aggregation solutions so that the data warehouse can substantially generate data for significant performance benefits and subsequently open many opportunities for the company to have enhanced analysis and reporting capabilities."

External Data Aggregation

Nowhere is the need to aggregate data from different health care entities to support decisions more important than in public health. The role of data aggregation in disease tracking, data mining, and patient monitoring for distance health cannot be overstated.

HIM professionals continue to migrate into career paths that involve management of public health databases and registries of all kinds, including patient, immunization, cancer, and trauma. In the Journal of AHIMA article "HIM's Role in Disease Tracking, Data Mining, and Patient Monitoring," author Mary Butler points out that "researchers at HealthMap collect data from social media, online news aggregators, and Twitter chats, along with public health reports, to provide a comprehensive view of the current global state of infectious diseases."

HealthMap describes itself as bringing "together disparate data sources to achieve a unified and comprehensive view of the current global state of infectious diseases." The role of good data aggregation is obvious. Epidemiologists are looking to new data sources such as Twitter messages, airline data, emergency calls, and other immediately available health information to help them predict the spread of disease in real time.

The data collected from these new sources can be used to make valid decisions only if the participants agree on a definition of the concepts that can be derived from such diverse sources.

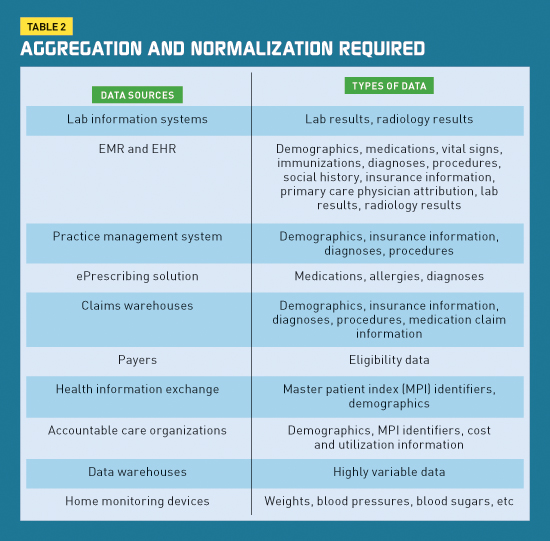

In a 2012 blog entry, Phillip Burgher, director of integration services for Philips Wellcentive, says that "responsible health management depends on having a comprehensive view of the patient's overall health; data accuracy relies heavily on data aggregation and normalization."

In Table 2, Burgher lists several data sources and types that require aggregation and normalization.

Burgher stresses that data aggregator tools must remain vendor-agnostic; they cannot be too closely tied to any one product if they are to aggregate data from multiple sources.
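One common way to keep an aggregator independent of any single product is to give each source its own small adapter that converts its format into a shared internal record. This is a general design pattern offered as a sketch, not Burgher's or Philips Wellcentive's design; the vendor names and field names are hypothetical.

```python
from typing import Callable, Iterable

# Shared internal shape every source is converted into.
Record = dict[str, str]


def from_vendor_x(row: dict) -> Record:
    # Hypothetical vendor X export with its own field names.
    return {"patient_id": row["MRN"], "code": row["DxCode"], "source": "vendor_x"}


def from_vendor_y(row: dict) -> Record:
    # Hypothetical vendor Y feed that nests the patient under "subject".
    return {"patient_id": row["subject"]["id"], "code": row["diagnosis"], "source": "vendor_y"}


# Adding a new source means registering one more adapter here;
# the aggregation core below does not change.
ADAPTERS: dict[str, Callable[[dict], Record]] = {
    "vendor_x": from_vendor_x,
    "vendor_y": from_vendor_y,
}


def aggregate(feeds: Iterable[tuple[str, Iterable[dict]]]) -> list[Record]:
    """Convert every row from every feed into the shared record format."""
    out: list[Record] = []
    for source, rows in feeds:
        adapt = ADAPTERS[source]
        out.extend(adapt(row) for row in rows)
    return out


records = aggregate([
    ("vendor_x", [{"MRN": "1", "DxCode": "A"}]),
    ("vendor_y", [{"subject": {"id": "2"}, "diagnosis": "B"}]),
])
print(records)
```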

Data Aggregation Challenges

Like Nichols, Burgher says the best way for data to remain relevant, adaptable, and current is to use HIT standards for both data format and coding.

At the 2017 AHIMA national convention, standards development had a strong presence, most notably in a showcase devoted to AHIMA's work in the field. AHIMA has also promoted the potential for HIM career growth in the standards development domain. Without strong standards development on all health care fronts, neither good data aggregation nor reliable interoperability is possible.

Because results fed into a population health management system may be redundant, normalization is necessary in data aggregation. If a system receives lab results encoded in LOINC and is later sent the same results unencoded from the EHR, data normalization recognizes them as the same results, preventing duplicate information and supporting accurate clinical quality calculations.
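The LOINC example can be sketched as mapping every incoming result to a normalized key before storing it, so an unencoded copy collapses onto the coded one. This assumes a hypothetical lookup table from an EHR's local test names to LOINC; 2345-7 is used as the LOINC code for serum/plasma glucose, but any real mapping should be verified against the LOINC database. Real normalization also has to reconcile units and timestamps, which this sketch treats as already consistent.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical map from an EHR's local, unencoded test names to LOINC codes.
LOCAL_TO_LOINC = {"GLUCOSE, SERUM": "2345-7"}


@dataclass(frozen=True)
class LabResult:
    patient_id: str
    code: Optional[str]        # LOINC code, if the sender encoded it
    local_name: Optional[str]  # free-text test name, if it did not
    collected_at: str          # ISO 8601 timestamp
    value: float
    unit: str


def normalized_key(r: LabResult) -> tuple:
    code = r.code or LOCAL_TO_LOINC.get((r.local_name or "").strip().upper())
    if code is None:
        # Unmappable results stay distinct instead of being merged by guesswork.
        return ("unmapped", r.patient_id, r.local_name, r.collected_at, r.value, r.unit)
    return (r.patient_id, code, r.collected_at, r.value, r.unit)


def deduplicate(results: list[LabResult]) -> list[LabResult]:
    """Keep the first result seen for each normalized key."""
    seen: set[tuple] = set()
    unique: list[LabResult] = []
    for r in results:
        key = normalized_key(r)
        if key not in seen:
            seen.add(key)
            unique.append(r)
    return unique


from_lab = LabResult("p1", "2345-7", None, "2018-03-01T08:00", 98.0, "mg/dL")
from_ehr = LabResult("p1", None, "Glucose, serum", "2018-03-01T08:00", 98.0, "mg/dL")
print(len(deduplicate([from_lab, from_ehr])))  # prints 1
```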

In addition to the duplicate result problem, the age-old dilemma of poorly maintained master patient indices can rear its head. A single patient in one system with multiple identifiers will corrupt the data in aggregated summaries.
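The master patient index problem shows up directly in counts. In this sketch, a hypothetical MPI crosswalk maps each (source system, local identifier) pair to an enterprise patient ID; counting raw local identifiers overstates the number of patients, while counting resolved IDs does not. The system names and identifiers are invented for illustration.

```python
from typing import Optional

# Hypothetical MPI crosswalk: (source system, local ID) -> enterprise patient ID.
CROSSWALK = {
    ("hospital_a", "A-100"): "E-1",
    ("clinic_b", "B-7"): "E-1",  # same person as A-100, different local ID
    ("clinic_b", "B-8"): "E-2",
}


def count_patients(
    records: list[tuple[str, str]],
    crosswalk: Optional[dict[tuple[str, str], str]] = None,
) -> int:
    """Count distinct patients, optionally resolving IDs through an MPI crosswalk."""
    ids: set = set()
    for system, local_id in records:
        key = (system, local_id)
        if crosswalk is None:
            ids.add(key)
        else:
            # Unmatched records keep their local key so they are still counted;
            # in practice they would also be queued for identity review.
            ids.add(crosswalk.get(key, key))
    return len(ids)


visits = [("hospital_a", "A-100"), ("clinic_b", "B-7"), ("clinic_b", "B-8")]
print(count_patients(visits))             # prints 3
print(count_patients(visits, CROSSWALK))  # prints 2
```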

Another historical problem is the difficulty of interfacing systems: the greater the number of interfaces, the more challenging and expensive it is to aggregate data accurately from several sources into a single format that can be queried, alerted on, and reported. With multiple feeder systems, keeping everything in sync as upgrades are applied to each feeder system becomes more challenging for IT staff.

New Data Aggregation Frontiers

Quality management and quality measures have been part of the HIM world since its founding. However, the old manual abstraction processes used to collect data and couple it with claims-based data are increasingly obsolete. In an article in the February 2017 Journal of AHIMA titled "Transitioning to Electronic Clinical Quality Measures in the Informatics Era," Shannon H. Houser, PhD, MPH, RHIA, FAHIMA, and Jean M. "Ginny" Meadows, BS, RN, FHIMSS, provide HIM professionals with guidance on their changing roles in the collection and aggregation of data.

Newly mandated electronic clinical quality measures (eCQMs) are "clinical quality measures that use structured data found in EHRs or other HIT to measure the quality of care provided to patients." eCQMs were first introduced in the rulemaking process for meaningful use stage 1. Now eCQMs are used in several CMS programs.

The Health Quality Measure Format (HQMF) provides a standard electronic specification for each eCQM. "It is essential for health care organizations, especially HIM professionals, to design standardized data collection tools, establish policy infrastructure of data capturing, data aggregation, data management, reporting, and strong governance practices to facilitate and support the transition to eCQMs," Houser and Meadows wrote.
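Many eCQMs are proportion measures built from populations such as an initial population, a denominator with exclusions, and a numerator. The sketch below is a simplified illustration of that structure only; the `Patient` fields, age range, and criteria are hypothetical and do not correspond to any real CMS measure or HQMF logic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Patient:
    # Hypothetical structured EHR fields; not from any real eCQM specification.
    age: int
    has_condition: bool
    excluded: bool       # meets a (hypothetical) denominator-exclusion criterion
    received_care: bool  # the care process the measure checks for


def proportion_measure(patients: list[Patient]) -> Optional[float]:
    """Simplified rate: numerator / (initial population minus exclusions)."""
    initial_population = [p for p in patients if 18 <= p.age <= 75 and p.has_condition]
    denominator = [p for p in initial_population if not p.excluded]
    numerator = [p for p in denominator if p.received_care]
    if not denominator:
        return None  # no eligible patients, so the rate is undefined
    return len(numerator) / len(denominator)


patients = [
    Patient(40, True, False, True),   # in numerator
    Patient(60, True, False, False),  # in denominator only
    Patient(50, True, True, False),   # excluded
    Patient(30, False, False, True),  # not in initial population
]
print(proportion_measure(patients))  # prints 0.5
```

Every step depends on the aggregated, structured data being complete and consistently coded; a missing exclusion flag or a duplicated patient changes the rate directly.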

Indeed, the increasing number of HIM professionals moving into data analytics and informatics will need to master data aggregation methods and knowledge as part of their career toolkits.

— Sandra Nunn, MA, RHIA, CHP, is a contributing editor at For The Record and principal of KAMC Consulting in Albuquerque, New Mexico.